Steps to reproduce

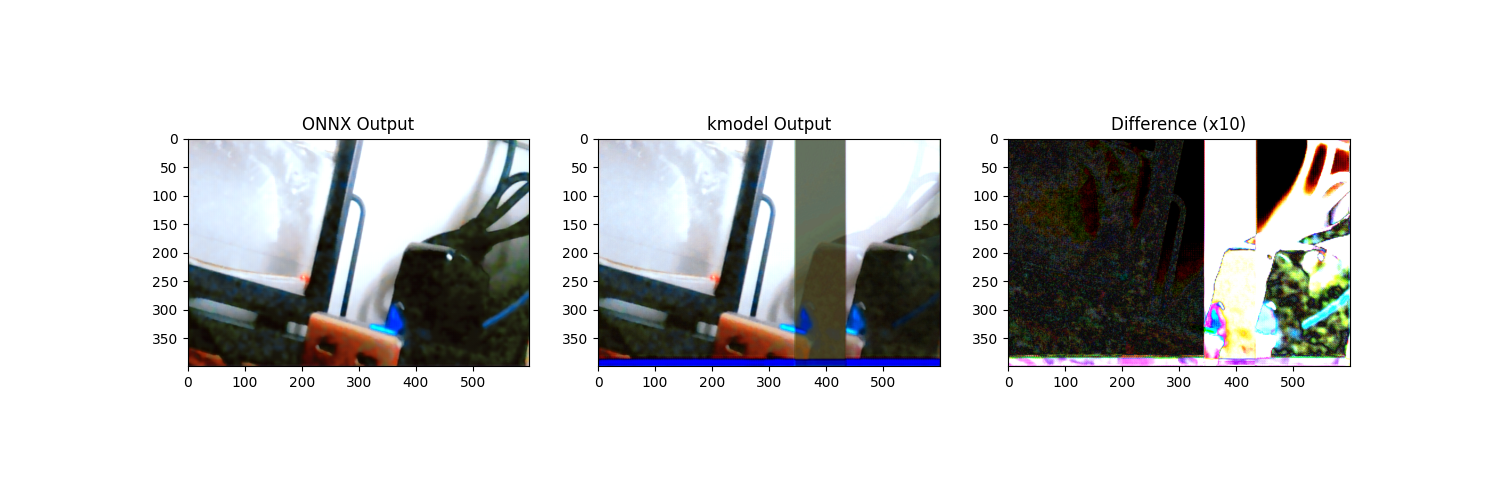

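I designed an image enhancement model. Inference with the ONNX model gives correct results, but after converting the ONNX model to a kmodel, inference in the simulator produces erroneous color blocks.

(The original ONNX model can be provided.)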

Expected result and actual result

The kmodel should output correct image data.

Software and hardware versions

nncase v2.9.0

Error log

Attempted solutions

Additional materials

Test code:

```python
import os

import numpy as np
from PIL import Image
import matplotlib.pyplot as plt
import onnxruntime  # ONNX Runtime, used for the reference ONNX inference

from nncase_base_func import *  # helper module; run_kmodel is expected to come from here

dump_path = "./tmp_onnx"
kmodel_path = "./tmp_onnx/test.kmodel"
onnx_path = "./model_dynamic_sim.onnx"  # ONNX model path
npy_path = "input_data.npy"  # path to the input .npy file


def to_uint8_image(chw):
    """Convert a (1, 3, H, W) or (3, H, W) array to an HWC uint8 image.

    Values are clipped to [0, 255] first: casting out-of-range floats directly
    to uint8 is not guaranteed to saturate and may wrap around, which can
    itself show up as false-color blocks.
    """
    return np.clip(np.squeeze(chw).transpose(1, 2, 0), 0, 255).astype(np.uint8)


def run_onnx_model(onnx_path, input_data):
    """Run the ONNX model and return its outputs scaled to [0, 255]."""
    print(f"Running ONNX model: {onnx_path}")
    # Create an ONNX Runtime session on the CPU
    session = onnxruntime.InferenceSession(onnx_path, providers=["CPUExecutionProvider"])
    # Name of the (single) model input
    input_name = session.get_inputs()[0].name
    # Normalize to [0, 1]
    input_data = input_data.astype(np.float32) / 255.0
    result = session.run(None, {input_name: input_data})
    # Scale outputs back to [0, 255] (heuristic: only if the output looks like [0, 1] data)
    return [r * 255.0 if r.max() <= 1.0 else r for r in result]


# Load the input data, shape (1, 3, 400, 600)
input_data = np.load(npy_path)
print(f"Input data shape: {input_data.shape}, dtype: {input_data.dtype}")

# Make sure dump_path exists
os.makedirs(dump_path, exist_ok=True)

# Run the ONNX model
onnx_result = run_onnx_model(onnx_path, input_data)
print("ONNX model inference completed")

# Save the ONNX outputs as raw .bin files and as images
for idx, output in enumerate(onnx_result):
    print(f"ONNX output {idx} shape: {output.shape}")
    output.tofile(os.path.join(dump_path, f"onnx_result_{idx}.bin"))
    png_path = os.path.join(dump_path, f"onnx_result_{idx}.png")
    Image.fromarray(to_uint8_image(output)).save(png_path)
    print(f"Saved ONNX result to {png_path}")

# Run the kmodel in the simulator. It is compiled with preprocessing enabled and
# input_type="uint8", so it receives the un-normalized 0-255 data.
print(f"Running kmodel: {kmodel_path}")
result = run_kmodel(kmodel_path, input_data)
print("kmodel inference completed")

# Save the kmodel outputs and compare them with the ONNX outputs
for idx, output in enumerate(result):
    print(f"kmodel output {idx} shape: {output.shape}")
    output.tofile(os.path.join(dump_path, f"nncase_result_{idx}.bin"))
    png_path = os.path.join(dump_path, f"nncase_result_{idx}.png")
    Image.fromarray(to_uint8_image(output)).save(png_path)
    print(f"Saved kmodel result to {png_path}")

    # Difference with respect to the ONNX output
    if idx < len(onnx_result):
        diff = np.abs(output.astype(np.float32) - onnx_result[idx])
        print(f"Output {idx} - Mean difference: {np.mean(diff):.4f}, "
              f"Max difference: {np.max(diff):.4f}")

        # ONNX output, kmodel output and the (x10) difference side by side
        plt.figure(figsize=(15, 5))
        plt.subplot(1, 3, 1)
        plt.title("ONNX Output")
        plt.imshow(to_uint8_image(onnx_result[idx]))
        plt.subplot(1, 3, 2)
        plt.title("kmodel Output")
        plt.imshow(to_uint8_image(output))
        plt.subplot(1, 3, 3)
        plt.title("Difference (x10)")
        plt.imshow(to_uint8_image(diff * 10))
        cmp_path = os.path.join(dump_path, f"comparison_{idx}.png")
        plt.savefig(cmp_path)
        plt.close()  # release the figure so figures do not accumulate
        print(f"Saved comparison visualization to {cmp_path}")
```
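The mean/max absolute difference is sensitive to output scale and does not say whether the error is global or localized. A common complementary check is cosine similarity plus per-channel error. The sketch below assumes both outputs are NumPy arrays of the same shape and on the same scale; `compare_outputs` and the tolerance `tol=8.0` are hypothetical choices, not part of nncase.

```python
import numpy as np


def compare_outputs(ref, out, tol=8.0):
    """Compare a reference (ONNX) output with a kmodel output.

    Both arrays are expected to have shape (1, C, H, W) or (C, H, W).
    Returns the cosine similarity, the per-channel mean absolute error and
    the fraction of pixels whose error exceeds `tol` in any channel.
    """
    ref = np.squeeze(np.asarray(ref, dtype=np.float64))
    out = np.squeeze(np.asarray(out, dtype=np.float64))
    if ref.shape != out.shape:
        raise ValueError(f"shape mismatch: {ref.shape} vs {out.shape}")

    # Cosine similarity of the flattened tensors (1.0 means same direction)
    a, b = ref.ravel(), out.ravel()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    cosine = float(a @ b / denom) if denom > 0 else float("nan")

    err = np.abs(ref - out)                    # shape (C, H, W)
    per_channel_mae = err.mean(axis=(1, 2))    # one value per channel
    bad_pixel_ratio = float((err.max(axis=0) > tol).mean())
    return cosine, per_channel_mae, bad_pixel_ratio
```

For example, `cos, mae, bad = compare_outputs(onnx_result[0], result[0])` inside the comparison loop. Since `run_onnx_model` rescales its outputs to [0, 255], the kmodel output may need the same rescaling first if it is still in [0, 1]. A cosine close to 1 together with a non-trivial bad-pixel ratio suggests localized errors such as color blocks, whereas a low cosine points more towards a global scale or layout mismatch.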

Model conversion configuration:

```python
import nncase

compile_options = nncase.CompileOptions()
compile_options.target = "k230"  # "cpu"
compile_options.dump_ir = True  # if False, will not dump the compile-time result.
compile_options.dump_asm = True
compile_options.dump_dir = dump_path
compile_options.input_file = ""

# preprocess args
compile_options.preprocess = True
if compile_options.preprocess:
    compile_options.input_type = "uint8"  # "uint8" "float32"
    compile_options.input_shape = [1, 3, 400, 600]
    # compile_options.input_shape = [1, 400, 600, 3]
    compile_options.input_range = [0, 255]
    compile_options.input_layout = "NCHW"  # "NHWC"
    compile_options.swapRB = False
    # intended to match the /255.0 normalization in run_onnx_model
    compile_options.mean = [0, 0, 0]
    compile_options.std = [255, 255, 255]
    compile_options.letterbox_value = 0
    compile_options.output_layout = "NCHW"  # "NHWC"
```
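To sanity-check that the preprocessing settings reproduce the ONNX-side normalization, the arithmetic can be emulated in NumPy. This sketch rests on an assumption not verified against the nncase source: the uint8 input is first mapped linearly from [0, 255] onto `input_range`, then `(x - mean) / std` is applied per channel. `emulate_preprocess` is a hypothetical helper, not an nncase API.

```python
import numpy as np


def emulate_preprocess(x_u8, input_range, mean, std):
    """Hypothetical emulation of the kmodel preprocessing for NCHW uint8 input.

    Assumes uint8 [0, 255] is mapped linearly onto input_range, then
    (x - mean) / std is applied per channel (axis 1).
    """
    lo, hi = input_range
    x = x_u8.astype(np.float32) * (hi - lo) / 255.0 + lo
    mean = np.asarray(mean, dtype=np.float32).reshape(1, -1, 1, 1)
    std = np.asarray(std, dtype=np.float32).reshape(1, -1, 1, 1)
    return (x - mean) / std


x = np.random.default_rng(0).integers(0, 256, size=(1, 3, 4, 6), dtype=np.uint8)
onnx_side = x.astype(np.float32) / 255.0  # normalization used in run_onnx_model
cfg_a = emulate_preprocess(x, [0, 255], [0, 0, 0], [255, 255, 255])  # settings above
cfg_b = emulate_preprocess(x, [0, 1], [0, 0, 0], [1, 1, 1])          # alternative settings
print(np.abs(cfg_a - onnx_side).max(), np.abs(cfg_b - onnx_side).max())
```

If the assumption holds, both `input_range = [0, 255]` with `std = 255` and `input_range = [0, 1]` with `std = 1` give the same [0, 1] input that the ONNX reference run sees, so the preprocessing settings are consistent with the ONNX path.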

```python
# quantize options
ptq_options = nncase.PTQTensorOptions()
ptq_options.quant_type = "uint8"  # datatype : "float32", "int8", "int16"
ptq_options.w_quant_type = "uint8"  # datatype : "float32", "int8", "int16"
ptq_options.calibrate_method = "NoClip"  # "Kld"
ptq_options.finetune_weights_method = "NoFineTuneWeights"
ptq_options.dump_quant_error = False
ptq_options.dump_quant_error_symmetric_for_signed = False

# mix quantize options
# more details in docs/MixQuant.md
ptq_options.quant_scheme = ""
ptq_options.quant_scheme_strict_mode = False
ptq_options.export_quant_scheme = False
ptq_options.export_weight_range_by_channel = False

############################################
# calib_data (the calibration samples) is prepared elsewhere and not shown here
ptq_options.samples_count = len(calib_data[0])
ptq_options.set_tensor_data(calib_data)
```
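`samples_count` is read from `len(calib_data[0])`, which implies `calib_data` is indexed as `calib_data[input_index][sample_index]`. A minimal sketch of assembling such a structure, assuming uint8 NCHW samples that are already resized to 400×600 (to match `input_type = "uint8"` and `input_layout = "NCHW"` above); `build_calib_data` is a hypothetical helper, and the exact nesting `set_tensor_data` expects should be confirmed in the nncase documentation. Post-training quantization generally works best when these samples are real, representative images rather than random data.

```python
import numpy as np


def build_calib_data(images_chw, input_shape=(1, 3, 400, 600)):
    """Pack calibration samples as calib_data[input_index][sample_index].

    `images_chw` is an iterable of uint8 arrays of shape (3, H, W) or
    (1, 3, H, W). The model has a single input, so the outer list has one entry.
    """
    samples = []
    for img in images_chw:
        arr = np.asarray(img)
        if arr.dtype != np.uint8:
            raise TypeError(f"expected uint8 samples, got {arr.dtype}")
        if arr.ndim == 3:
            arr = arr[np.newaxis]  # add the batch dimension
        if arr.shape != tuple(input_shape):
            # An explicit check catches HWC data, which a plain reshape would silently scramble
            raise ValueError(f"expected shape {tuple(input_shape)}, got {arr.shape}")
        samples.append(arr)
    if not samples:
        raise ValueError("need at least one calibration sample")
    return [samples]
```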